dbMap/Web – Confidence and Quality (CQ)

dbMap/Web allows you to associate with your stored data records both a measure of their data quality and the degree of confidence you have in them. Confidence and quality measures are part of the PPDM standard.

Because quality is an objective computation, obtained from well-defined rules, while confidence is somewhat subjective, one does not necessarily follow the other. For instance, you can have high-quality data in which you have no confidence at all: many data items may be stored, but most of them may come from unreliable sources.

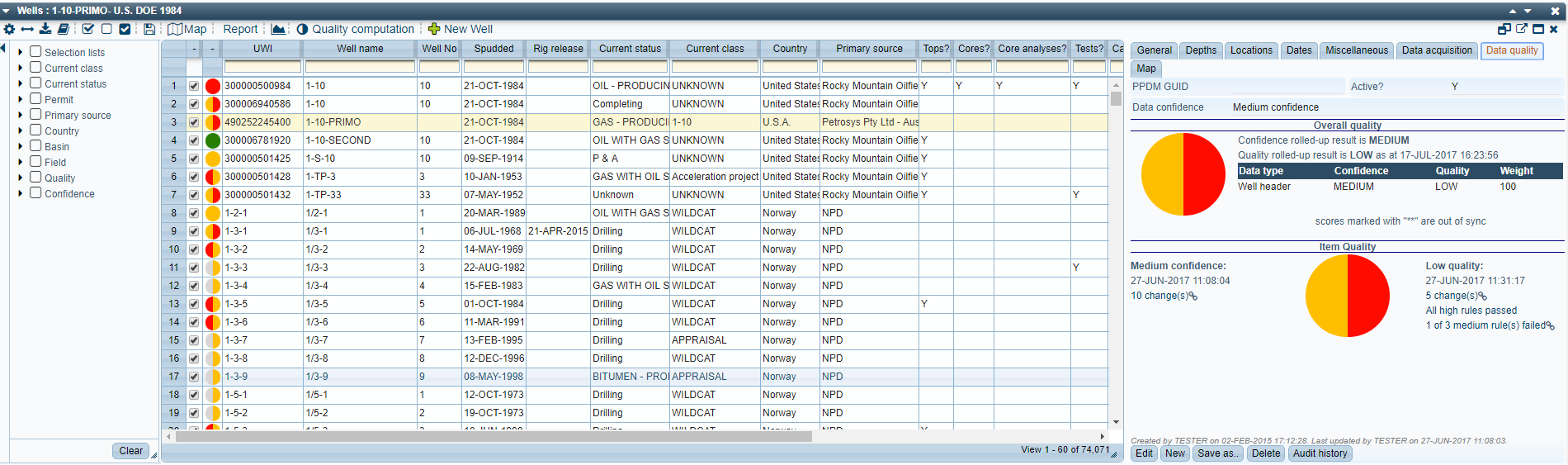

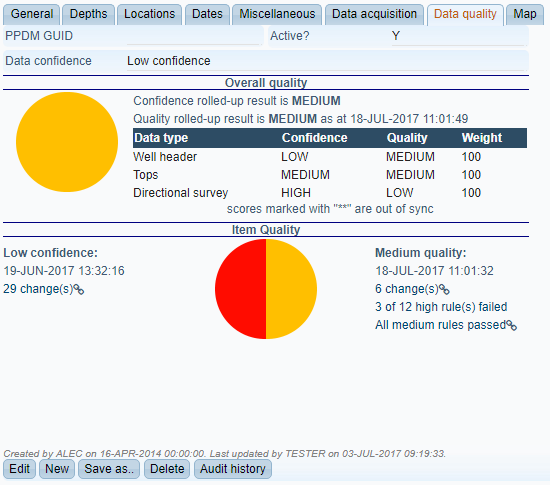

If activated for a panel, the confidence and quality (CQ, for short) controller adds a special column to each record. This column contains a “token” showing the confidence level (left half circle) and the quality level (right half circle) for the record. A “data quality” tab on the right-hand panel also provides more detailed information.

Changes to quality and confidence scores are audited, and the audit trail is available via links on the page; another link will display a computation summary for quality, with details on which quality rules passed and which failed.

Confidence and Quality Concepts

First of all, it is worth briefly describing what confidence and quality actually mean in dbMap/Web, as these concepts are not entirely intuitive and can be tricky.

CONFIDENCE

Confidence is a subjective measure! The degree of confidence set by a user reflects his or her confidence in the accuracy of the stored information, no matter how comprehensive that information is. One might have just a couple of data items about a seismic line, for instance, but if one is absolutely sure they are accurate, the confidence in these data items is HIGH. Conversely, if all data items defining a well are present but they came from unreliable sources, the confidence rank for this well should be set to LOW or, at the very least, to UNKNOWN. A confidence marker can have the values LOW (red), MEDIUM (yellow), HIGH (green) or UNKNOWN (grey). Every time a user changes a marker, he or she must state a reason and may optionally add a remark about the change.

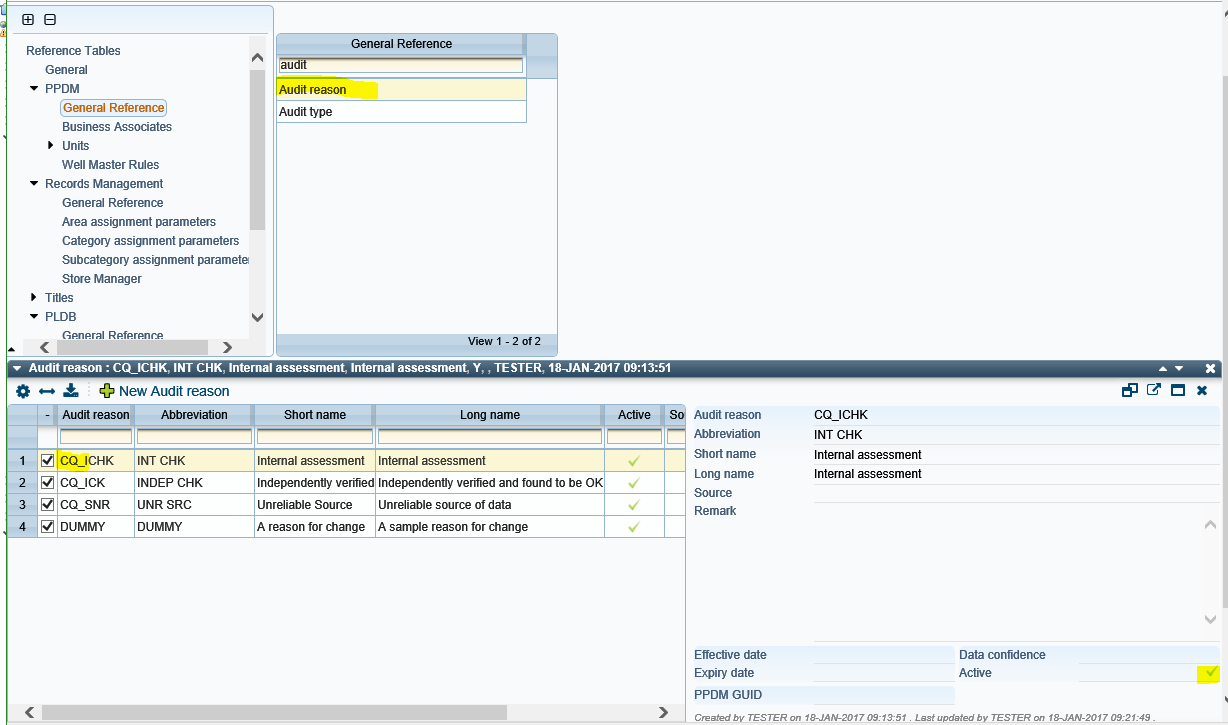
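
The rule that every confidence change carries a mandatory reason and an optional remark can be sketched as a small data model. This is a minimal illustration, not dbMap/Web's implementation: the class names, the `set_confidence` method, the reason code `CQ_VERIFIED`, and the default of UNKNOWN for a new record's confidence are all hypothetical assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Confidence(Enum):
    """Confidence levels and the token colours described above."""
    LOW = "red"
    MEDIUM = "yellow"
    HIGH = "green"
    UNKNOWN = "grey"


@dataclass
class ConfidenceChange:
    """One audited change (hypothetical structure)."""
    old: Confidence
    new: Confidence
    reason: str              # mandatory
    remark: Optional[str]    # optional


@dataclass
class Record:
    # Assumption: a new record starts with UNKNOWN confidence.
    confidence: Confidence = Confidence.UNKNOWN
    history: List[ConfidenceChange] = field(default_factory=list)

    def set_confidence(self, new: Confidence, reason: str,
                       remark: Optional[str] = None) -> None:
        """Change the marker, refusing the change if no reason is given."""
        if not reason or not reason.strip():
            raise ValueError("a reason is required to change confidence")
        self.history.append(ConfidenceChange(self.confidence, new, reason, remark))
        self.confidence = new


# Usage: raise a well's confidence, citing a (hypothetical) CQ reason code.
well = Record()
well.set_confidence(Confidence.HIGH, "CQ_VERIFIED",
                    remark="Checked against the operator report")
```

Keeping the history as a list of change objects mirrors the fact that confidence changes are audited: the old value, new value, reason and remark are all retained.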

The acceptable reasons can be defined via the ADMIN / REFERENCE TABLES menu option, by selecting PPDM / General Reference and then the AUDIT REASON table. As this table stores audit reasons for every kind of auditing procedure, you must mark your audit reasons as CQ-specific by:

a) Prefixing all audit reason codes with CQ_. If the audit reason code does not start with CQ_ (letters C and Q, followed by an underscore), it will be ignored.

b) Marking the reason as ACTIVE, by checking the corresponding box on the screen. If a reason is not marked as ACTIVE, it will also be ignored.
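
Rules a) and b) amount to a simple filter over the AUDIT REASON table. The sketch below assumes a simplified in-memory representation of the table rows (the `AuditReason` class and `cq_reasons` function are hypothetical) and assumes the `CQ_` prefix check is case-sensitive, which the text does not state.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AuditReason:
    code: str      # audit reason code from the AUDIT REASON table
    active: bool   # the ACTIVE check box on the reference table screen


def cq_reasons(reasons: List[AuditReason]) -> List[str]:
    """Return the codes usable for CQ changes: prefixed with CQ_ and marked ACTIVE."""
    return [r.code for r in reasons if r.code.startswith("CQ_") and r.active]


reasons = [
    AuditReason("CQ_VERIFIED", True),    # kept
    AuditReason("CQ_OBSOLETE", False),   # ignored: not ACTIVE
    AuditReason("VERIFIED", True),       # ignored: no CQ_ prefix
    AuditReason("CQVERIFIED", True),     # ignored: underscore missing
]
print(cq_reasons(reasons))  # ['CQ_VERIFIED']
```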

QUALITY

Quality, on the other hand, is an objective measure, a computed grade. In dbMap/Web, the data quality of an entity can also be LOW, MEDIUM, HIGH or UNKNOWN, but these grades are computed from the results of processing the quality rules. So the data quality of the seismic line mentioned above will likely be LOW or, at best, MEDIUM, whereas the same measure for the well with all data items defined will likely be HIGH.

It is therefore perfectly possible for the same item to bear a HIGH CONFIDENCE and LOW QUALITY token, or a LOW CONFIDENCE and HIGH QUALITY one.

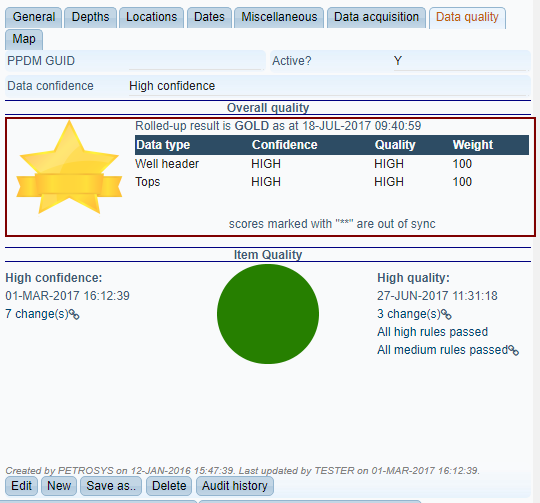

Confidence and quality are also not determined by the data entity alone. As the screenshot above shows, there are two measures of each: one that depends only on the item itself (the Item Quality), and one that depends on the item and its chosen relevant sub-items, or components (the Overall Quality).

The item scores are computed from the rules directly related to the item and from the confidence grade set for the item alone; the overall scores are computed from these and from the confidence and quality results of the subordinate entities. In this context, the item and its sub-items are called ROLL-UP COMPONENTS. If a data entity has roll-up components, an overall score is always computed.

Finally, if both the overall confidence score and the overall quality score are HIGH, the item is said to have achieved GOLD standard, which dbMap/Web flags with a special token.
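
The two pairs of scores and the GOLD condition can be summarised in a small structure. This is a hedged sketch: the `Grade` and `CQScores` names are hypothetical, and representing the overall scores as `None` when an entity has no roll-up components is an assumption, since the text only says that an overall score is always computed when roll-up components exist.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Grade(Enum):
    LOW = "LOW"
    MEDIUM = "MEDIUM"
    HIGH = "HIGH"
    UNKNOWN = "UNKNOWN"


@dataclass
class CQScores:
    item_confidence: Grade
    item_quality: Grade
    # Assumption: None when the entity has no roll-up components.
    overall_confidence: Optional[Grade] = None
    overall_quality: Optional[Grade] = None

    def is_gold(self) -> bool:
        """GOLD standard: both overall scores are HIGH."""
        return (self.overall_confidence is Grade.HIGH
                and self.overall_quality is Grade.HIGH)


well = CQScores(Grade.HIGH, Grade.MEDIUM, Grade.HIGH, Grade.HIGH)
print(well.is_gold())  # True: only the overall scores matter here
```

Note that in this sketch the item-level grades play no direct part in the GOLD test; they influence it only through the overall scores they feed into.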

Quality Rules Definition

As mentioned above, data quality is computed from well-defined rules. These rules can be defined on a standard screen, accessible through the Admin → Reference tables section. You are free to define as many rules as you wish, although dbMap/Web ships with a comprehensive rule set for wells and some other data entities.

These rules are SQL (Structured Query Language) queries, so defining them requires specific SQL knowledge. For that reason, they are usually defined by a systems administrator or a database administrator.
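
To give a feel for what a rule might look like, the sketch below uses an in-memory SQLite database. Everything here is hypothetical: the simplified `well` table (loosely modelled on PPDM's WELL table, with `uwi`, `spud_date` and `final_td` columns), the rule text, and in particular the convention that a rule passes when its query returns at least one row for the record. The actual rule format used by dbMap/Web is not described in this section.

```python
import sqlite3

# Hypothetical rule: a well passes if it has a recorded spud date.
RULE_HAS_SPUD_DATE = "SELECT 1 FROM well WHERE uwi = ? AND spud_date IS NOT NULL"


def rule_passes(conn: sqlite3.Connection, rule_sql: str, uwi: str) -> bool:
    """Assumed convention: the rule passes if its query returns any row."""
    return conn.execute(rule_sql, (uwi,)).fetchone() is not None


# Simplified, hypothetical stand-in for a PPDM WELL table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE well (uwi TEXT PRIMARY KEY, spud_date TEXT, final_td REAL)")
conn.executemany(
    "INSERT INTO well VALUES (?, ?, ?)",
    [("W-001", "2019-03-14", 3200.0), ("W-002", None, None)],
)

print(rule_passes(conn, RULE_HAS_SPUD_DATE, "W-001"))  # True
print(rule_passes(conn, RULE_HAS_SPUD_DATE, "W-002"))  # False
```

Passing the record key as a bound parameter (`?`) rather than formatting it into the SQL string keeps the rule text reusable across records and avoids SQL injection.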

Quality Rules Processing

When dbMap/Web computes item quality in isolation, it takes all item-specific (i.e. not roll-up related) rules, executes their queries, and applies a very simple set of criteria to determine the final quality score:

If ALL high quality rules pass and ALL medium quality rules pass, the item is HIGH quality.

If any high quality rule fails but ALL medium quality rules pass, the item is MEDIUM quality.

If none of the above is true, the item is LOW quality.

A new record is always assigned UNKNOWN quality until quality is computed for it.
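
The criteria above translate directly into a short function. In this sketch each item-specific rule result is given as a pair of (rule level, passed); the `Level` enum and `item_quality` name are hypothetical, and `None` stands for a record on which quality has never been computed. How dbMap/Web grades an item with no rules at all is not stated; here it falls out as HIGH, because "all rules pass" is vacuously true.

```python
from enum import Enum
from typing import Iterable, Optional, Tuple


class Level(Enum):
    HIGH = "HIGH"      # a high quality rule
    MEDIUM = "MEDIUM"  # a medium quality rule


def item_quality(results: Optional[Iterable[Tuple[Level, bool]]]) -> str:
    """Grade an item from its item-specific rule results."""
    if results is None:  # quality never computed for this record
        return "UNKNOWN"
    checked = list(results)
    high_ok = all(passed for level, passed in checked if level is Level.HIGH)
    medium_ok = all(passed for level, passed in checked if level is Level.MEDIUM)
    if high_ok and medium_ok:
        return "HIGH"
    if medium_ok:  # some high quality rule failed, all medium ones passed
        return "MEDIUM"
    return "LOW"


print(item_quality([(Level.HIGH, False), (Level.MEDIUM, True)]))  # MEDIUM
```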

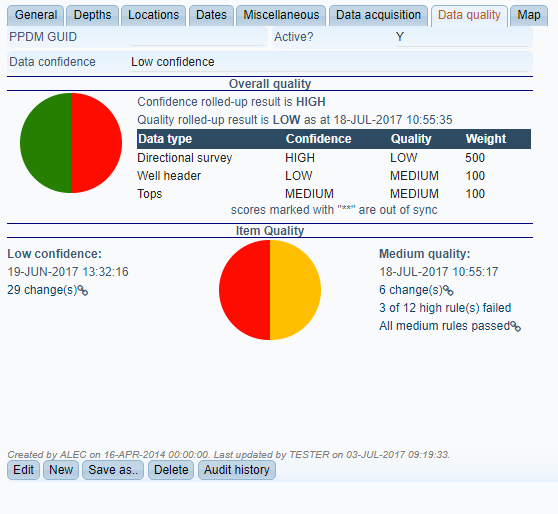

To compute overall scores, dbMap/Web computes a weighted average of scores for the components, considering both quality and confidence separately. The image below illustrates the concept:

In the example above, the large weight assigned to the directional surveys makes their HIGH confidence and LOW quality scores determine the overall score for the well.

The same record, recomputed with equal weights, produces a different overall result, as shown in the image below.
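
A weighted average over grades needs a numeric scale. The sketch below is only one plausible way to do it: the mapping LOW=1, MEDIUM=2, HIGH=3, rounding half up to the nearest grade, and skipping UNKNOWN components are all assumptions, as is the `overall_grade` name. dbMap/Web's actual scale, weights and rounding are not described here. The function handles one measure at a time, so it would be called once for confidence and once for quality.

```python
from typing import Dict, List, Tuple

# Assumed numeric scale for the grades.
SCORE: Dict[str, int] = {"LOW": 1, "MEDIUM": 2, "HIGH": 3}
GRADE: Dict[int, str] = {v: k for k, v in SCORE.items()}


def overall_grade(components: List[Tuple[str, float]]) -> str:
    """components: (grade, weight) per roll-up component, for ONE measure only."""
    # Assumption: UNKNOWN grades and non-positive weights are left out.
    known = [(SCORE[g], w) for g, w in components if g in SCORE and w > 0]
    total = sum(w for _, w in known)
    if total == 0:
        return "UNKNOWN"
    mean = sum(s * w for s, w in known) / total
    return GRADE[int(mean + 0.5)]  # round half up to the nearest grade


# Hypothetical quality grades: the well item itself vs. its directional surveys.
print(overall_grade([("HIGH", 1), ("LOW", 8)]))  # LOW: the heavy component dominates
print(overall_grade([("HIGH", 1), ("LOW", 1)]))  # MEDIUM: equal weights
```

With these made-up numbers, a heavily weighted LOW component pulls the overall grade down to LOW, while equal weights land on MEDIUM, which shows how the choice of weights alone can change the overall result.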

Item scores and overall scores are recomputed automatically by the database as the data changes. Recomputation can also be triggered manually via the “Quality computation” button, which is available to authorised users in the list toolbars.